If you’re still relying on last-click attribution or vanity metrics to measure your campaign’s success, you’re probably missing the real story.

The truth is, those numbers might look good, but they don’t always tell you what’s actually driving results.

That’s where incrementality testing comes in. It’s the go-to strategy smart brands use to determine what’s truly working and what’s just wasting budget.

Not sure how to leverage incrementality testing yet? Don’t worry, we’ve got you. In this blog, you’ll learn:

- What incrementality testing really means (and why it matters)

- Simple formulas and real-world examples to calculate incremental lift

- The difference between incrementality, A/B testing, MTA, and MMM

- A step-by-step guide to run your own test, start to finish

- Best practices, common mistakes, and ideal channels to test

P.S. Tired of pouring budget into campaigns without knowing what’s actually driving results? At inBeat Agency, we blend performance-driven creative with data-backed strategy, so you get clarity, not confusion. Whether you're scaling paid media or influencer campaigns, we build with incrementality in mind. Book a free strategy call now and let’s make every dollar count.


What Is Incrementality Testing?

Incrementality testing is a powerful way to figure out the true impact of your marketing efforts. Instead of guessing whether your latest advertising campaign actually drove more sales, incrementality tests help you isolate what would’ve happened without that campaign.

By comparing a control group (who doesn’t see the marketing) with a test group (who does), you uncover the incremental lift, the extra conversions, revenue, or engagement directly caused by your marketing activity. It’s all about understanding the real impact of your marketing spend.

Types of Incremental Effect

Not all marketing efforts lead to a win, and that’s exactly what incrementality testing helps reveal. When you run a test, you’ll typically see one of three outcomes:

- Positive incremental lift: This means your marketing tactic worked! You saw an increase in sales, sign-ups, or conversions that wouldn’t have happened otherwise: proof of true impact.

- Neutral effect: Your campaign didn’t hurt or help much. There was no significant difference between the test and control groups.

- Negative incremental lift: Ouch, your marketing might’ve actually backfired. This shows a decrease in performance, possibly due to poor targeting or overlapping touchpoints.

Understanding these effects gives you actionable insights to optimize future campaigns and budget allocation.

“Incrementality testing has become the industry’s gold standard for understanding advertising’s true impact in a privacy-first way.” JD Ohlinger, Nik Nedyalkov - Think with Google

Example of Incrementality Testing

Shinola, a Detroit-based luxury lifestyle brand, ran into measurement roadblocks after Apple’s App Tracking Transparency (ATT) limited access to user-level data. Suspecting that Facebook was underreporting the effectiveness of their prospecting campaigns, Shinola turned to geo-based incrementality testing for clarity.

They ran a zip-code-level, geo-matched market test, comparing areas that saw Facebook ads (test group) with those that didn’t (control group). This method allowed Shinola to measure the true impact of their campaigns without relying on platform-reported data or user tracking.

Results:

- The test showed a 14.3% incremental lift in conversions from their Awareness and Dynamic Ads for Broad Audiences (DABA) campaigns. It also revealed that Facebook had been underreporting the performance of Shinola’s campaigns by a massive 413%.

- These insights empowered Shinola to make smarter budget allocation decisions, doubling down on high-performing advertising efforts and improving overall return on investment.

Now that you know what incrementality testing is and how it works in the real world, let’s talk about why it truly matters:

Why Conducting Incrementality Testing Matters

Just because a campaign looks good on paper doesn’t mean it’s actually driving results. Incrementality testing helps you cut through the noise and focus on what actually works.

- Identifies true campaign impact: Instead of guessing, you’ll know which marketing activities are genuinely driving incremental conversions.

- Prevents over-attribution: Platforms love to take credit, even for conversions that would’ve happened anyway. Incrementality testing helps you catch that.

- Improves budget allocation: With a clear view of what’s working, you can shift your marketing spend away from fluff and into proven performers.

- Uncovers hidden opportunities: Some marketing channels fly under the radar in traditional multi-touch attribution models, but this approach reveals their real value.

- Drives smarter decisions: Armed with accurate insights and causal impact data, you can fine-tune campaign performance with confidence.

- Maximizes ROAS and revenue: Why spend more when you can just spend smarter? Incrementality helps you do more with your current marketing budget.

- Builds internal credibility: Whether it’s your boss or the board, now you’ve got the numbers to back up your marketing strategy and prove real, measurable business outcomes.

- Works without cookies or tracking: Since incrementality testing doesn’t rely on third-party cookies or user-level tracking, it’s a future-proof alternative to multi-touch attribution, especially in a privacy-first, post-cookie world.

With the benefits clear, the next step is understanding how to put incrementality testing into motion.

How Does Incrementality Testing Work?

Incrementality testing works by comparing two groups: one that sees your marketing campaign (the test group) and one that doesn’t (the control group). The idea is simple: any difference in performance between the two can be attributed to your marketing efforts. That’s your incremental lift.

To keep things fair and statistically valid, you need randomization. There are a few common ways to do it:

- User-level: Randomly split individual users into test and control groups.

- Geo-level: Run your campaign in specific geographic regions, while keeping others ad-free.

- Time-based: Alternate running and pausing campaigns to create control periods.

Each method has its pros and cons, but the goal is the same: isolate the true impact of your campaign with clean, unbiased comparisons.

How Do You Calculate Incrementality in Marketing?

Once you’ve run your incrementality test, the next step is understanding the true impact of your campaign. To calculate incremental lift, use this formula:

Incrementality = (Test Conversions – Control Conversions) / Control Conversions

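This tells you how much better the test group (who saw your ads) performed compared to the control group (who didn’t). The result is a percentage increase or lift, attributed directly to your marketing. Comparing raw conversion counts only works when both groups are the same size; if they aren’t, use conversion rates (conversions ÷ group size) in the formula instead.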

For example, let’s say:

- The control group had 100 conversions

- The test group had 120 conversions

Plug that into the formula:

(120 - 100) / 100 = 0.20 → 20% incremental lift

That means your campaign drove 20% more conversions than would’ve happened without it. That’s your incremental impact.
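To make the calculation repeatable, here is a minimal Python sketch of the lift formula. It is an illustration, not a standard tool: the function name is hypothetical, and the group sizes in the second call (12,000 and 8,000 users) are made-up numbers chosen to show why unequal groups need conversion rates rather than raw counts.

```python
from typing import Optional


def incremental_lift(test_conv: int, control_conv: int,
                     test_size: Optional[int] = None,
                     control_size: Optional[int] = None) -> float:
    """Relative lift of the test group over the control group.

    Without group sizes, this is the raw-count formula, which assumes
    equally sized groups. With sizes, it compares conversion rates.
    """
    if test_size is None or control_size is None:
        return (test_conv - control_conv) / control_conv
    test_rate = test_conv / test_size
    control_rate = control_conv / control_size
    return (test_rate - control_rate) / control_rate


# Equal groups: the example from the text.
print(f"{incremental_lift(120, 100):.0%}")                  # 20%
# Hypothetical unequal groups: same counts, very different story.
print(f"{incremental_lift(120, 100, 12_000, 8_000):.0%}")   # -20%
```

With 12,000 test users converting at 1% and 8,000 control users converting at 1.25%, the same 120 vs. 100 conversions actually indicate a negative lift.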

Incremental Lift vs. Incremental Profit

While incremental lift tells you how effective your campaign was in driving extra actions, incremental profit digs deeper. It factors in your marketing spend and the value of those conversions.

For example:

If you spent $5,000 to get 20 incremental conversions, and each conversion is worth $300 in profit, your incremental profit is:

(20 x $300) - $5,000 = $1,000 profit

But if those 20 incremental conversions repeat monthly and your $5,000 investment was a one-time cost, your cumulative profit grows to $7,000 by month two, $13,000 by month three, and so on, turning a short-term spend into long-term growth.
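The same arithmetic as a small Python sketch, assuming (as the example does) that the 20 incremental conversions recur every month with no additional spend. The function names are illustrative, not from any library.

```python
def incremental_profit(incremental_conversions, profit_per_conversion, spend):
    """Profit from incremental conversions minus what you spent to get them."""
    return incremental_conversions * profit_per_conversion - spend


def cumulative_profit(monthly_incremental_conversions, profit_per_conversion,
                      one_time_spend, months):
    """Cumulative profit at the end of each month.

    Assumes the same incremental conversions recur every month and the
    spend happens once, up front.
    """
    totals = []
    for month in range(1, months + 1):
        gross = month * monthly_incremental_conversions * profit_per_conversion
        totals.append(gross - one_time_spend)
    return totals


print(incremental_profit(20, 300, 5_000))    # 1000
print(cumulative_profit(20, 300, 5_000, 3))  # [1000, 7000, 13000]
```

If the conversions don’t repeat, or the campaign needs ongoing spend to sustain them, only the first figure applies.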

Pro tip: A campaign can show a great lift, but if it costs too much to generate that lift, it may not be worth it. Always pair lift with profit to make smarter budget decisions.

Confidence Intervals & P-Values

It’s not enough to just see a lift; you need to know if it’s statistically significant. That’s where confidence intervals and p-values come in.

- Confidence interval: This gives you a range of plausible values for the true lift. A 95% confidence interval means that if you repeated the test many times, about 95% of the intervals calculated this way would contain the true lift.

- P-value: This tells you how likely you’d be to see a difference at least this large if your campaign actually had no effect. A p-value below 0.05 is the usual threshold for calling a result statistically significant, meaning it’s unlikely to be just random noise.

In short:

- A low p-value = your lift is unlikely to be a fluke

- A tight confidence interval = you’ve got precision

These statistical techniques are key to making sure your insights are accurate and actionable, not just happy accidents.
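One common way to get both numbers for conversion data is a two-proportion z-test. The Python sketch below assumes large groups (so the normal approximation holds) and reports the confidence interval for the absolute difference in conversion rates, not the relative lift. The 10,000 users per group in the example are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def lift_significance(test_conv, test_n, control_conv, control_n,
                      confidence=0.95):
    """Two-proportion z-test on conversion rates.

    Returns (rate difference, confidence interval for that difference,
    two-sided p-value).
    """
    p_test = test_conv / test_n
    p_control = control_conv / control_n
    diff = p_test - p_control

    # Pooled standard error, assuming no difference (the null hypothesis).
    pooled = (test_conv + control_conv) / (test_n + control_n)
    se_null = sqrt(pooled * (1 - pooled) * (1 / test_n + 1 / control_n))
    z = diff / se_null
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))

    # Unpooled standard error for the interval around the observed difference.
    se = sqrt(p_test * (1 - p_test) / test_n
              + p_control * (1 - p_control) / control_n)
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    interval = (diff - z_crit * se, diff + z_crit * se)
    return diff, interval, p_value


diff, interval, p_value = lift_significance(120, 10_000, 100, 10_000)
print(f"difference: {diff:.4f}, 95% CI: ({interval[0]:.4f}, {interval[1]:.4f}), "
      f"p-value: {p_value:.3f}")
```

In this example, the 20% lift from the earlier calculation is not statistically significant at 10,000 users per group: the p-value lands well above 0.05 and the interval includes zero.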

Understanding the math is one thing; choosing the right testing approach is the next. Let’s look at the main types of incrementality testing.

Incrementality Testing Types

There’s more than one way to run an incrementality test, and the method you choose depends on your goals, audience size, and available data.

Let’s break down the most common types and what makes each one unique:

1. Holdout Group Testing

This is one of the most straightforward and widely used incrementality testing methods. You simply split your audience into two groups: one sees the campaign (test group), and the other doesn’t (holdout or control group). Comparing their behaviors, like conversion rates or sales, lets you measure the incremental lift your campaign truly generated.
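A simple way to split users into test and holdout groups is to hash each user ID, so the same person always lands in the same group across sessions. This is a minimal sketch, assuming you have a stable user ID; the salt value and the 10% holdout share are hypothetical choices.

```python
import hashlib


def assign_group(user_id: str, holdout_share: float = 0.10,
                 salt: str = "spring-promo") -> str:
    """Deterministically assign a user to "test" or "control".

    The salt (a hypothetical campaign name) keeps assignments independent
    across different tests.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) / 2**32  # roughly uniform value in [0, 1)
    return "control" if bucket < holdout_share else "test"


groups = [assign_group(f"user-{i}") for i in range(10_000)]
print(groups.count("control") / len(groups))  # close to 0.10
```

Hashing instead of calling a random number generator means you don’t need to store the assignment table: anyone with the user ID and salt can recompute it.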

2. Geo-Based Testing

Geo-based testing involves running your campaign in specific geographic regions (the test group) while keeping other similar regions ad-free (the control group). It’s a great option when user-level tracking isn’t available, which makes it well suited to a post-cookie world.

Comparing conversion lift across regions is how you measure the incremental impact of your campaign without needing personal data. Just make sure the regions are comparable in terms of baseline sales, demographics, and marketing activity to get clean, reliable results.
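Because test and control regions rarely have identical baselines, one simple estimator scales the control regions’ in-campaign results by the pre-campaign ratio between the two. This Python sketch shows that ratio-based approach under the assumption that the pre-period ratio would have held without the campaign. It is not the method used in the Shinola test and not a substitute for dedicated geo-experiment tools. The conversion counts are hypothetical.

```python
def geo_lift(test_pre, control_pre, test_during, control_during):
    """Relative lift in test regions, using control regions as the counterfactual.

    The pre-period ratio between test and control regions scales the
    control regions' in-campaign results into an expected (no-ad)
    baseline for the test regions.
    """
    baseline_ratio = test_pre / control_pre
    expected_test = control_during * baseline_ratio
    return (test_during - expected_test) / expected_test


# Hypothetical conversions: test regions run slightly smaller than control.
lift = geo_lift(test_pre=1_000, control_pre=1_250,
                test_during=1_150, control_during=1_300)
print(f"{lift:.1%}")
```

Here the test regions would have been expected to reach about 1,040 conversions without ads, so 1,150 implies a lift of roughly 10.6%, even though a naive comparison with the control regions (1,150 vs. 1,300) looks negative.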

3. Intent-To-Treat (ITT) Testing

Intent-To-Treat (ITT) testing measures the impact of a campaign based on who was intended to receive the treatment, regardless of whether they actually engaged with it. For example, if you target a group with an ad but some never see it due to ad blockers or skipped placements, ITT still counts them in the test group.

This method gives you a more realistic view of incremental impact in real-world conditions, where not everyone behaves as expected, and avoids bias from only looking at engaged users.
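The difference between an intent-to-treat comparison and an “exposed users only” comparison is easiest to see with a toy dataset. In this sketch, the `User` record and all the data are hypothetical; the point is that ITT uses everyone who was assigned to the test group, whether or not they saw the ad.

```python
from dataclasses import dataclass


@dataclass
class User:
    group: str       # "test" or "control", fixed at assignment time
    exposed: bool    # whether the user actually saw the ad
    converted: bool


def conversion_rate(users):
    return sum(u.converted for u in users) / len(users)


def itt_lift(users):
    """Lift computed on everyone assigned, regardless of exposure."""
    test = [u for u in users if u.group == "test"]
    control = [u for u in users if u.group == "control"]
    control_rate = conversion_rate(control)
    return (conversion_rate(test) - control_rate) / control_rate


# Hypothetical data: 10 assigned test users (6 saw the ad), 10 control users.
users = (
    [User("test", True, True)] * 3 + [User("test", True, False)] * 3
    + [User("test", False, True)] * 1 + [User("test", False, False)] * 3
    + [User("control", False, True)] * 3 + [User("control", False, False)] * 7
)

exposed_only = [u for u in users if u.group == "test" and u.exposed]
print(f"ITT lift: {itt_lift(users):.0%}")
print(f"Exposed-only test rate: {conversion_rate(exposed_only):.0%}")
```

The ITT lift here is about 33% (40% vs. 30%), while comparing only exposed users (50%) against control would suggest roughly double that. The exposed-only view is biased because people who end up seeing an ad can differ systematically from those who don’t.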

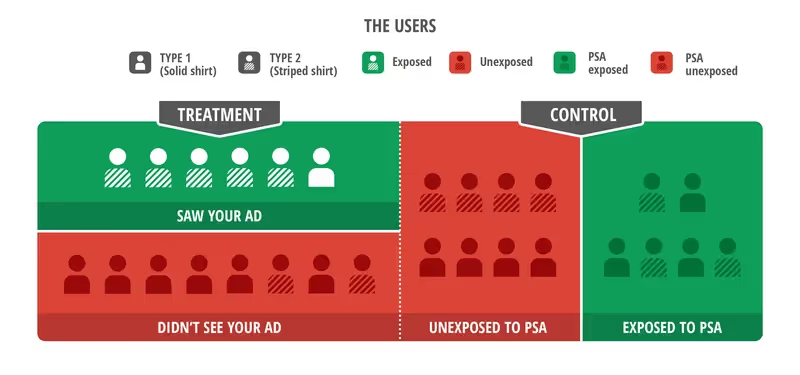

4. Ghost Ads / PSA Testing

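Ghost ads and PSA (public service announcement) tests are closely related methods used mostly on digital platforms. In a PSA test, users in the control group are shown placeholder ads, like public service announcements, instead of your actual campaign ads. Ghost ads skip the placeholder: the platform records when a control-group user would have seen your ad, and that user sees whatever ad would otherwise have run.

With PSAs, both groups experience the same number of ad impressions, which controls for the effect of simply seeing an ad. With ghost ads, you compare people who saw your ad against control users who would have seen it, without paying for placeholder impressions. Either way, you isolate the true causal impact of your advertising efforts.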

5. Time-Based (Pre/Post) Testing

Time-based testing compares performance before and during a marketing campaign to estimate its incremental impact. You track key metrics, like conversion rates, sales, or customer acquisition, during a baseline period (pre-campaign) and then measure them again while the campaign runs (post-launch).

The difference helps estimate incremental lift. While it’s simple to execute, it can be tricky to control for external factors like seasonality or market trends.
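A pre/post comparison is easy to compute; the main detail is to compare daily averages so baseline and campaign periods of different lengths are treated fairly. This sketch uses hypothetical daily conversion counts and, like the method itself, does nothing to correct for seasonality or outside trends.

```python
from statistics import mean


def pre_post_lift(pre_daily, during_daily):
    """Relative change in average daily conversions.

    Daily averages let periods of different lengths be compared. This does
    NOT control for seasonality, promotions, or other outside changes.
    """
    baseline = mean(pre_daily)
    return (mean(during_daily) - baseline) / baseline


pre = [40, 42, 38, 40]      # hypothetical baseline days
during = [44, 46, 45]       # hypothetical campaign days
print(f"{pre_post_lift(pre, during):.1%}")  # 12.5%
```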

6. Multivariate Testing

Multivariate testing takes things a step further by testing multiple variables, like different ad creatives, messaging, or marketing channels, simultaneously to see which combination drives the highest incremental lift.

Unlike A/B testing, which isolates one change at a time, this method reveals how different elements interact to influence outcomes.
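A full-factorial multivariate design is every combination of the variables you’re testing. This Python sketch enumerates the cells with `itertools.product`; the creative, message, and channel values are hypothetical, and the extra no-ad cell is an assumption added so you can still measure incremental lift against a holdout.

```python
from itertools import product

creatives = ["video", "static"]        # hypothetical variants
messages = ["discount", "quality"]
channels = ["meta", "tiktok"]

# One test cell per combination of variables (2 x 2 x 2 = 8).
cells = [
    {"creative": c, "message": m, "channel": ch}
    for c, m, ch in product(creatives, messages, channels)
]
# A no-ad holdout cell, so each combination can be compared to "no campaign".
cells.append({"creative": None, "message": None, "channel": None})

print(len(cells))  # 9
```

Every added variable multiplies the number of cells, which shrinks the audience in each one, so multivariate tests need considerably more traffic than a simple holdout.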

7. Matched Market Testing

Matched market testing compares two or more geographic regions that are demographically and behaviorally similar, one exposed to your campaign (test) and one not (control). By carefully pairing these markets, you can measure the incremental return of your marketing activity while controlling for outside variables like seasonality or regional trends.
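As a simplified illustration of pairing, this sketch matches markets by a single metric (baseline sales) and then randomly picks one market in each pair for the test group. Real matched-market designs usually match on several variables and on historical trends; the city names and sales figures here are hypothetical, and with an odd number of markets the leftover one is simply dropped.

```python
import random


def matched_pairs(baseline_sales, seed=7):
    """Pair markets with similar baseline sales, then randomly choose
    which market in each pair gets the campaign.

    Returns a list of (test_market, control_market) tuples.
    """
    rng = random.Random(seed)
    ranked = sorted(baseline_sales, key=baseline_sales.get)
    pairs = []
    # Adjacent markets in the sorted list are the closest matches.
    for a, b in zip(ranked[0::2], ranked[1::2]):
        test, control = (a, b) if rng.random() < 0.5 else (b, a)
        pairs.append((test, control))
    return pairs


# Hypothetical weekly baseline sales by market.
markets = {"Denver": 410, "Portland": 395, "Austin": 520,
           "Nashville": 505, "Tampa": 300, "Raleigh": 310}
for test, control in matched_pairs(markets):
    print(f"test: {test:<10} control: {control}")
```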

How to Run Incrementality Test: Step-by-Step Guide

You’ve got the theory, now it’s time to execute. inBeat experts have shared a simple step-by-step guide to help you run a test that delivers real, measurable insights.

1. Set Business Goals

Before diving into data or designing test groups, start by getting super clear on what you want to learn. Are you trying to:

- Prove the value of a new marketing channel?

- Optimize your budget allocation?

- Measure the incremental lift of a specific advertising campaign?

Defining your goals upfront keeps your test focused and meaningful.

In fact, teams achieve 20-25% higher performance when they have clear, challenging goals rather than vague or undefined ones.

Think in terms of business outcomes, like increasing incremental revenue, improving return on investment (ROI), or validating the impact of a marketing tactic.

The more specific your goal, the easier it’ll be to measure success and translate insights into actionable strategies.

Pro Tip: We suggest using the SMART (Specific, Measurable, Achievable, Relevant, Time-bound) goal-setting framework to avoid vague objectives. Tools like Notion or ClickUp can help you map goals to KPIs and timelines.

2. Choose The Right Method

Once your goals are clear, it’s time to pick the testing method that fits best.

- Are you working with regional campaigns? Then geo-based testing might be your move.

- Running digital ads on Meta or Google? Consider ghost ads or holdout testing.

- Don’t have access to user-level data? Matched market or time-based testing can still get the job done.

The key is to match the method to your campaign setup, data availability, and how precise you want your incremental insights to be.

There’s no one-size-fits-all; just choose the approach that aligns with your marketing strategy and testing constraints.

3. Segment The Audience

Not all customers are created equal, and that’s exactly why audience segmentation matters.

Research shows that around 80% of companies that use market segmentation report increased sales. This shows just how powerful smart segmentation can be.

To get accurate insights, you’ll need to divide your audience in a way that makes sense for your campaign.

You can segment by lifetime value (LTV), past purchase behavior, geographic region, or even by where they are in the customer journey.

Why? Because comparing apples to apples leads to clearer results.

For example, if you're testing a premium product, it makes more sense to focus on high-LTV segments. Or, if you’re running local ads, segmenting by region keeps your control group and test group balanced.

Smart segmentation = cleaner data, stronger insights, and a better read on your campaign’s true impact.

Pro Tip: Platforms like Twilio Segment or Amplitude can help you build advanced audience segments based on behavioral data, LTV, and engagement to ensure smarter control vs. test group allocation.
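Once segments exist, a stratified split keeps the test and control groups balanced within each one: you randomize separately inside every segment instead of across the whole audience. This Python sketch assumes users arrive as `(user_id, segment)` tuples; the segment labels, 10% holdout share, and seed are hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(users, segment_of, holdout_share=0.10, seed=42):
    """Randomly split users into test and control within each segment,
    so every segment is represented in the same proportion in both groups."""
    rng = random.Random(seed)
    by_segment = defaultdict(list)
    for user in users:
        by_segment[segment_of(user)].append(user)

    test, control = [], []
    for members in by_segment.values():
        rng.shuffle(members)
        n_control = round(len(members) * holdout_share)
        control.extend(members[:n_control])
        test.extend(members[n_control:])
    return test, control


# Hypothetical audience: 200 high-LTV and 800 low-LTV users.
users = [(f"u{i}", "high_ltv" if i < 200 else "low_ltv") for i in range(1_000)]
test, control = stratified_split(users, segment_of=lambda u: u[1])
print(len(test), len(control))  # 900 100
```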

4. Avoid Test Contamination

Test contamination can ruin your results without you even realizing it. Contamination happens when your control group is accidentally exposed to your marketing activity, making it harder to see the real difference in performance between the test and control groups.

For example, if you’re running a geo-based test but people in your control region are still seeing your ads online or hearing about the campaign through word of mouth, your results can get blurry. The same goes for overlapping campaigns or retargeting settings that don’t exclude control audiences.

The fix? Be intentional. Use clean segmentation, exclude overlapping audiences, and work with tools or platforms that allow proper test group isolation.

Pro Tip: Use geo-targeting tools (like the location targeting in Google Ads or Facebook Ads) to tightly control ad delivery boundaries and prevent test spillover. Also, double-check retargeting settings that might blur your group lines.
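A quick sanity check before and during a test is to compare your group lists against each other and against a list of users who actually received impressions. This sketch assumes you can export those ID lists (for example, from your ad server or CRM); the IDs below are hypothetical.

```python
def check_contamination(test_ids, control_ids, exposed_ids):
    """Flag users who break test isolation.

    - in_both_groups: users assigned to test AND control
    - exposed_controls: control users who were served your ads anyway
    """
    test, control, exposed = set(test_ids), set(control_ids), set(exposed_ids)
    return {
        "in_both_groups": test & control,
        "exposed_controls": control & exposed,
    }


report = check_contamination(
    test_ids=["a", "b", "c"],
    control_ids=["c", "d", "e"],
    exposed_ids=["a", "b", "d"],
)
print(report)
```

Both sets should be empty; any IDs that show up point to overlapping audiences or retargeting lists that don’t exclude the control group.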

5. Define KPIs

Before you hit “go” on any test, you need to be crystal clear on what success looks like. Are you aiming for more incremental conversions? Higher incremental revenue? Better return on investment?

Picking the right key performance indicators (KPIs) upfront ensures you’re measuring what actually matters.

In fact, businesses that define clear KPIs are three times more likely to achieve their goals.

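Common KPIs in incrementality testing include:

- Incremental conversions

- Incremental revenue

- Incremental lift

- Return on investment (ROI) or return on ad spend (ROAS)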

6. Run The Test For a Fixed Duration

Once everything’s set up, it’s go time. But don’t just let your test run indefinitely.

Set a fixed testing period upfront, long enough to gather meaningful data but short enough to avoid outside variables like seasonal changes or overlapping campaigns.

Most tests run anywhere from 2 to 6 weeks, depending on your audience size and conversion volume.

Sticking to a pre-set timeline keeps you from stopping the test the moment results look good (or bad), which keeps your results clean and actionable.

Remember, the goal isn’t to run it forever; it’s to get accurate insights that you can use to improve future campaigns.

7. Analyze & Iterate

Once your test wraps up, it’s time to dig into the results. Look at your incremental lift, revenue impact, and those all-important KPIs you set earlier.

Did the campaign actually move the needle? Was the lift statistically significant? This is where incrementality testing’s real value comes through: turning data into actionable insights.

But don’t stop there.

Use what you’ve learned to optimize future campaigns, adjust your budget allocation, and maybe even test a new channel or strategy.

Incrementality testing isn’t a one-and-done deal; it’s a cycle of learning and improving to get the most out of your marketing spend.

Pro Tip: At inBeat, we don’t just report outcomes; we always create a “What We Learned” slide that highlights at least one insight we can apply to future campaigns.

With the testing process laid out, the next big question is: where should you apply it? Let’s explore which marketing channels are best suited for incrementality testing.

Which Channels Are Ideal for Incrementality Testing?

Not every channel makes it easy to see what’s truly working, but some are perfect playgrounds for incrementality testing. These channels let you clearly separate test from control, track performance, and measure real lift without the noise.

- Paid social: Platforms like Meta, TikTok, and LinkedIn are ideal for testing because of their robust targeting options. You can easily split audiences and measure the incremental impact of your ads.

- Search (branded vs. non-branded): Want to know if people would’ve found you anyway? Testing branded vs. non-branded keywords helps you uncover whether your marketing spend is actually driving incremental conversions or just capturing existing demand.

- Programmatic display: With geo-level targeting and flexible segmentation, display ads are great for running geo-based or matched-market incrementality tests.

- Email: Email campaigns are perfect for holdout testing. Just exclude a portion of your list and compare performance. It's a low-cost, high-impact channel to test.

- CTV/OTT (Connected TV/Over-the-Top): Streaming platforms allow for regional targeting, which makes them ideal for geo-based incrementality tests, especially when measuring cross-device impact.

Best Practices for Accurate Incrementality Testing

Getting incrementality testing right isn’t just about choosing a method; it’s also about execution. Here are some best practices to make sure your data is clean and your insights are solid:

1. Avoid Overlapping Users

Make sure your test and control groups are totally separate. If someone ends up in both groups through retargeting or multi-channel overlap, it can skew your results and muddy the real impact.

2. Watch for Seasonality & Promotions

Running your test during a high-traffic holiday or a big promotional push can inflate performance and distort your findings. Try to test during a “normal” business period, or if that’s not possible, make sure you factor those influences into your analysis.

3. Sample Size Matters

You don’t need millions of users, but you do need enough data to draw a confident conclusion. Use a sample size calculator like the one from Evan Miller to estimate what’s “enough” based on your expected conversion rates and desired confidence level.

For example, if your control group typically converts at 5%, and you want to detect a 10% relative lift (from 5% to 5.5%) with 95% confidence and 80% statistical power, you’d need roughly 31,000 users per group. The higher your expected lift or baseline conversion rate, the smaller the required sample.
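Here is the standard normal-approximation formula for comparing two conversion rates, as a Python sketch. Online calculators, including Evan Miller’s, may use slightly different formulas and return somewhat different numbers; treat this as a planning estimate. The function name is illustrative.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(baseline_rate, relative_lift, alpha=0.05,
                          power=0.80):
    """Users needed per group to detect a relative lift in conversion rate
    (two-sided test, normal approximation)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


print(sample_size_per_group(0.05, 0.10))  # roughly 31,000
print(sample_size_per_group(0.05, 0.20))  # a bigger lift needs far fewer users
```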

Common Mistakes to Avoid in Incrementality Testing

Even with the right setup, some easy-to-make mistakes can throw off your results. Avoid these to keep your test clean and your conclusions bulletproof:

- Over-attributing based on last-click data: Last-touch attribution often overstates impact. Incrementality is about causal impact, not just who gets the final credit.

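- Cutting tests short: Ending a test too early can lead to inconclusive or misleading results. Let it run for the full duration you planned, with a sample size chosen in advance, rather than stopping the moment it looks significant.

- Interpreting “neutral” lift incorrectly: No lift doesn’t automatically mean your campaign failed; it might mean the test was too small to detect a modest effect, or that your control group wasn’t properly isolated. And if your audience genuinely would’ve converted anyway, that’s a useful finding too, since it shows where spend isn’t needed.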

- Using poor segmentation: If your audience isn’t segmented cleanly (e.g., mixing geographies or behaviors), you risk comparing apples to oranges and getting bad data in return.

Incrementality vs. Other Measurement Models

You’ve got the do’s and don’ts down; now let’s compare incrementality testing to other common ways marketers measure success.

Incrementality Testing vs A/B Testing

A/B testing focuses on comparing two versions of a specific marketing element, like a headline or CTA, to see which one performs better. It’s great for optimizing user engagement and improving performance within a single campaign element. But it assumes your marketing activity is already having an effect.

Incrementality testing, on the other hand, goes deeper. It measures the actual contribution of a campaign to your business outcomes, whether it truly drove additional conversions, app installs, or sales that wouldn’t have happened otherwise. Instead of comparing versions, it compares results between a group exposed to your campaign and a group that wasn’t.

In other words, A/B testing shows which version works better, while incrementality testing shows if your marketing efforts are truly making a difference.

Read Next: How Are Incrementality Experiments Different From A/B Experiments?

Incrementality Testing vs Multi-Touch Attribution (MTA)

Multi-touch attribution (MTA) assigns credit to multiple marketing touchpoints along the customer journey to help you understand which channels influenced a conversion. But here’s the catch: MTA shows correlation, not causation. Just because a user interacted with an ad doesn’t mean that ad drove the conversion.

Incrementality testing takes it a step further by measuring the causal impact of your marketing. It isolates what would’ve happened without the campaign, revealing the true lift your efforts generated.

In short, MTA tells you where credit is shared, while incrementality tells you if credit is even deserved.

Incrementality Testing vs Marketing Mix Modeling (MMM)

Marketing Mix Modeling (MMM) takes a high-level, statistical approach to measure the impact of different marketing channels over time, often using historical data and broader trends. It’s great for long-term planning and budget allocation, especially across offline and online channels. But MMM can be slow, less granular, and harder to apply in fast-paced digital environments.

Incrementality testing, on the other hand, is more agile and experiment-driven. It provides faster, campaign-specific insights by comparing exposed vs. unexposed groups to measure true impact.

Think of MMM as your long-range radar, while incrementality testing is your precision lens for campaign-level decisions.

Want to See What’s Actually Driving Results? Let inBeat Agency Help

Incrementality testing is a smarter, more honest way to measure what’s truly working. It cuts through inflated metrics and assumptions to give you clear, actionable insights into the real impact of your campaigns.

Whether you're optimizing paid media, influencer collaborations, or full-funnel strategies, knowing your incremental lift puts you in control of your budget, your message, and your growth.

Key Takeaways:

- Incrementality testing helps measure the true causal impact of marketing efforts.

- It compares a test group (exposed) to a control group (unexposed).

- Common testing methods include holdout groups, geo-based, ghost ads, and ITT.

- Incremental lift is calculated using the difference in conversions between groups.

- Incrementality differs from A/B testing, which only compares variations, not overall impact.

- Best practices include clean segmentation, fixed duration, and avoiding overlap.

- Channels like paid social, email, CTV, and search are ideal for incrementality testing.

If you're looking to launch campaigns that drive real, measurable growth, not just nice-looking dashboards, inBeat Agency can assist. Our team blends data-driven creative with testing strategies like incrementality to ensure every campaign delivers true value.

Book a free strategy call and let’s build campaigns that move the needle!

FAQs

What is the meaning of incrementality testing?

Incrementality testing is a method used to measure the true impact of a marketing campaign. It helps you understand how many conversions (or other outcomes) were actually caused by your marketing, by comparing a group that saw the campaign (test group) to one that didn’t (control group). It answers the key question: Would these results have happened without the campaign?

How to do incrementality testing?

To run an incrementality test, you split your audience into a test group (exposed to your marketing) and a control group (not exposed). After the campaign runs for a fixed duration, you compare outcomes like conversions or sales between the two groups. The difference is your incremental lift.

What is the difference between A/B testing and incrementality testing?

A/B testing compares two versions of something (like two ads or emails) to see which performs better. It assumes your campaign already has an impact. Incrementality testing goes a step further; it measures whether your marketing caused a result that wouldn’t have happened otherwise. A/B shows what works better; incrementality shows if it works at all.

How is incrementality calculated?

The most common formula is: “(Test Group Conversions – Control Group Conversions) / Control Group Conversions”. This gives you a percentage known as incremental lift, the added impact directly attributed to your marketing activity.

What is an example of incrementality?

Let’s say you're running Facebook ads. You show them to one group (test) and hold them back from another (control). At the end of the test, the test group had 120 conversions, while the control group had 100. That means your campaign drove an incremental lift of 20%; those extra conversions wouldn’t have happened without the ads.
